Create-a-talking-image-recognition-solution-with-Azure-IoT-Edge-Azure-Cognitive-Services

This project is maintained by gloveboxes

| Author | Dave Glover, Microsoft Cloud Developer Advocate |
|---|---|
| Solution | Creating an image recognition solution with Azure IoT Edge and Azure Cognitive Services |
| Platform | Azure IoT Edge |
| Documentation | Azure IoT Edge, Azure Custom Vision, Azure Speech Services, Azure Functions on Edge, Azure Stream Analytics, Azure Machine Learning Services |
| Video Training | Enable edge intelligence with Azure IoT Edge |
| Programming Language | Python |
| Date | August 2020 |

Solution introduction

Image Classification with Azure IoT Edge

There are lots of applications for image recognition, but what I had in mind when developing this application was a solution to help vision-impaired people scan fruit and vegetables at a self-service checkout.

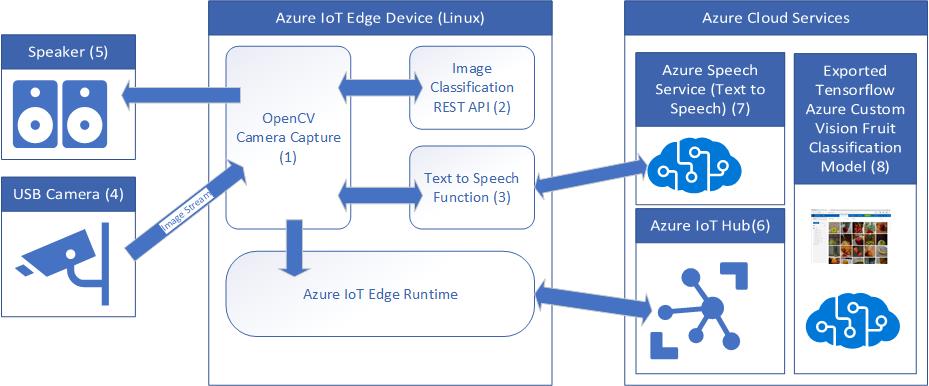

Solution Architecture

This solution uses three Azure services (Azure IoT Hub, Azure Speech Services, and Azure Custom Vision), each of which offers a free tier to whet your appetite.

The system identifies the item scanned against a pre-trained machine learning model, tells the person what they have just scanned, then sends a record of the transaction to a central inventory system.
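As a rough sketch of that flow, the Python below wires the three steps together. It is not the author's code: the function name `scan_item`, the spoken phrase, and the record fields are hypothetical, and the classifier, speech, and inventory calls are passed in as plain callables so the flow can be exercised without any Azure services.

```python
import json
from datetime import datetime, timezone
from typing import Callable, Dict


def scan_item(
    image: bytes,
    classify: Callable[[bytes], str],
    speak: Callable[[str], None],
    send_to_inventory: Callable[[str], None],
) -> Dict[str, str]:
    """Hypothetical sketch: classify one scanned image, announce it, record it."""
    # 1. Identify the item against the pre-trained model.
    label = classify(image)
    # 2. Tell the shopper what they have just scanned.
    speak(f"You scanned {label}")
    # 3. Send a record of the transaction to the central inventory system.
    record = {
        "item": label,
        "scannedAt": datetime.now(timezone.utc).isoformat(),
    }
    send_to_inventory(json.dumps(record))
    return record
```

For a quick local check, pass in fakes such as `lambda img: "apple"` for the classifier and `list.append` for the speech and inventory calls; in the real solution these would be calls to the Image Classification Module, the Text to Speech Module, and Azure IoT Hub respectively.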

The solution runs on Azure IoT Edge and consists of the following components:

- The Camera Capture Module handles scanning items with a camera. It calls the Image Classification Module to identify the item, then calls the “Text to Speech” Module to convert the item label to speech, and the name of the scanned item is played on the attached speaker.

- The Image Classification Module runs a TensorFlow machine learning model that has been trained with images of fruit, and classifies the scanned items.

- The Text to Speech Module converts the name of the scanned item from text to speech using Azure Speech Services.

- A USB camera is used to capture images of the items to be bought.

- A speaker is used for text-to-speech playback.

- Azure IoT Hub (free tier) is used for managing, deploying, and reporting on the Azure IoT Edge devices running the solution.

- Azure Speech Services (free tier) is used to generate very natural speech telling the shopper what they have just scanned.

- The Azure Custom Vision service was used to build the fruit model used for image classification.
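Two small data-handling steps sit between these components: picking a label from the classifier's response, and wrapping that label for the speech service. The sketch below assumes the classifier returns JSON with a `predictions` list of `tagName`/`probability` entries (the shape used by Custom Vision's exported containers, assumed here rather than taken from this project) and that the Text to Speech Module sends SSML to Azure Speech Services. The 0.6 threshold and the `en-US-JennyNeural` voice name are illustrative assumptions.

```python
import json
from typing import Optional
from xml.sax.saxutils import escape, quoteattr


def top_label(response_json: str, threshold: float = 0.6) -> Optional[str]:
    """Return the most probable tag name, or None if nothing clears the threshold.

    Assumes a response shaped like {"predictions": [{"tagName": ..., "probability": ...}]}.
    """
    predictions = json.loads(response_json).get("predictions", [])
    if not predictions:
        return None
    best = max(predictions, key=lambda p: p["probability"])
    return best["tagName"] if best["probability"] >= threshold else None


def build_ssml(text: str, voice: str = "en-US-JennyNeural", lang: str = "en-US") -> str:
    """Wrap plain text in a minimal SSML document for a text-to-speech request."""
    # escape() protects characters such as & and < in the item label;
    # quoteattr() returns a correctly quoted XML attribute value.
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        "</speak>"
    )
```

Returning `None` below the threshold lets the caller say something like "item not recognised" instead of announcing a low-confidence guess.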

What is Azure IoT Edge

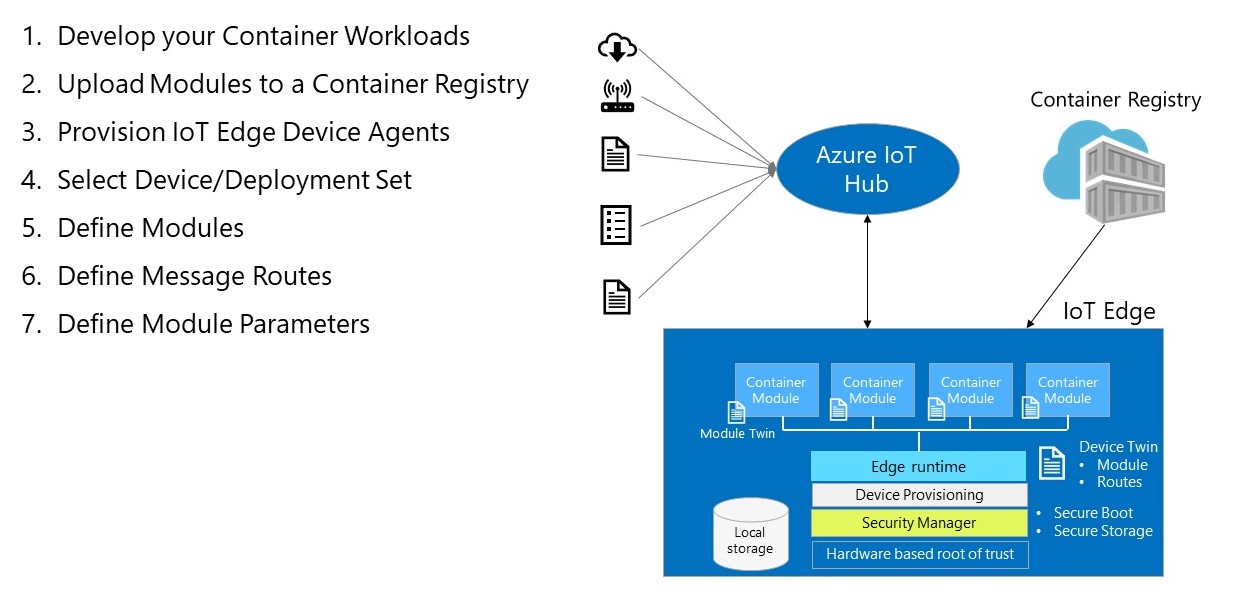

The solution is built on Azure IoT Edge, which is part of the Azure IoT Hub service and is used to define, secure, and deploy a solution to an edge device. It also provides cloud-based central monitoring and reporting of the edge device.

The main components of an IoT Edge solution are:

- The IoT Edge Runtime, which is installed on the local edge device and consists of two main components: the IoT Edge “hub”, responsible for communications, and the IoT Edge “agent”, responsible for running and monitoring modules on the edge device.

- Modules. Modules are the unit of deployment. Modules are Docker images pulled from a registry such as Azure Container Registry or Docker Hub. Modules can be custom developed, built as Azure Functions, or exported as services from Azure Custom Vision, Azure Machine Learning, or Azure Stream Analytics.

- Routes. Routes define message paths between modules, and between modules and Azure IoT Hub.

- Properties. You can set “desired” properties for a module from Azure IoT Hub. For example, you might want to set a threshold property for a temperature alert.

- Create Options. Create Options tell the Docker runtime what options to start the module with. For example, you may wish to open ports for REST APIs or debugging, define paths to devices such as a USB camera, set environment variables, or enable privileged mode for certain hardware operations. For more information, see the Docker API documentation.

- Deployment Manifest. The Deployment Manifest pulls everything together and tells the Azure IoT Edge runtime which modules to deploy and from where, what message routes to set up, and what create options to start each module with.
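To make these pieces concrete, here is a minimal sketch that builds a trimmed-down deployment manifest as a Python dictionary, showing modules, create options, routes, and desired properties in one place. The module names, registry, route name, `CLASSIFIER_URL` environment variable, and `threshold` property are hypothetical, and the required `systemModules` section is omitted for brevity, so treat this as an illustration of the shape rather than a deployable manifest. Note that `createOptions` is stored as a JSON string containing Docker create options.

```python
import json
from typing import Any, Dict


def module_entry(image: str, create_options: Dict[str, Any]) -> Dict[str, Any]:
    """One module entry for $edgeAgent; createOptions must be a JSON *string*."""
    return {
        "version": "1.0",
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {"image": image, "createOptions": json.dumps(create_options)},
    }


def build_manifest(registry: str) -> Dict[str, Any]:
    """Hypothetical sketch of a deployment manifest (systemModules omitted)."""
    camera_options = {
        "HostConfig": {
            # Map the USB camera device into the container.
            "Devices": [
                {"PathOnHost": "/dev/video0", "PathInContainer": "/dev/video0",
                 "CgroupPermissions": "mrw"}
            ],
            # Privileged mode for certain hardware operations; enable only where needed.
            "Privileged": True,
        },
        # Hypothetical environment variable pointing at the classifier module.
        "Env": ["CLASSIFIER_URL=http://image-classifier:80/image"],
    }
    return {
        "modulesContent": {
            "$edgeAgent": {
                "properties.desired": {
                    "schemaVersion": "1.0",
                    "runtime": {"type": "docker", "settings": {"minDockerVersion": "v1.25"}},
                    "modules": {
                        "camera-capture": module_entry(
                            f"{registry}/camera-capture:latest", camera_options),
                        "image-classifier": module_entry(
                            f"{registry}/image-classifier:latest", {}),
                    },
                }
            },
            "$edgeHub": {
                "properties.desired": {
                    "schemaVersion": "1.0",
                    # Route camera-capture output messages up to Azure IoT Hub.
                    "routes": {
                        "cameraToCloud":
                            "FROM /messages/modules/camera-capture/outputs/output1 INTO $upstream"
                    },
                    "storeAndForwardConfiguration": {"timeToLiveSecs": 7200},
                }
            },
            # Desired properties delivered to the camera-capture module twin.
            "camera-capture": {"properties.desired": {"threshold": 0.6}},
        }
    }
```

Running `print(json.dumps(build_manifest("myregistry.azurecr.io"), indent=2))` shows the JSON layout; in practice tooling such as the VS Code Azure IoT Edge extension generates the full manifest for you.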

Azure IoT Edge in Action

Solution Architectural Considerations

So, with that overview of Azure IoT Edge, here were my considerations and constraints for the solution.

- The solution should scale from a Raspberry Pi (running Raspberry Pi OS Linux) on ARM32v7, to my Linux desktop development environment, to an industrial-grade IoT Edge device such as those found in the Certified IoT Edge Catalog.

- The solution needs camera input. I used a USB webcam for image capture as it was supported across all target devices.
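One way to cover ARM32v7 devices and x86-64 desktops with the same modules is to build an image per architecture and pick the tag that matches the host. The sketch below assumes a `<version>-<arch>` tagging convention and a hypothetical repository name; the mapping covers only common `platform.machine()` values and is not taken from this project.

```python
import platform
from typing import Optional

# Assumed mapping from platform.machine() values to architecture tag suffixes.
ARCH_TAGS = {
    "x86_64": "amd64",
    "AMD64": "amd64",     # value reported on 64-bit Windows
    "armv7l": "arm32v7",  # e.g. Raspberry Pi OS (32-bit)
    "aarch64": "arm64v8",
}


def image_for_host(repository: str, version: str, machine: Optional[str] = None) -> str:
    """Return an image reference like 'repo:0.0.1-arm32v7' for the given machine."""
    machine = machine or platform.machine()
    try:
        arch = ARCH_TAGS[machine]
    except KeyError:
        raise ValueError(f"unsupported architecture: {machine!r}") from None
    return f"{repository}:{version}-{arch}"
```

Calling `image_for_host("myregistry.azurecr.io/camera-capture", "0.0.1")` with no `machine` argument uses the current host's architecture.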

Let’s get started

- Module 1: Create an Azure IoT Hub

- Module 2: Install Azure IoT Edge on your Raspberry Pi

- Module 3: Set up your development environment

- Module 4: Create Azure Cognitive Services

- Module 5: Build and deploy the solution

- Module 6: Build and deploy the solution